The Building Data Lakes on AWS (ANBDLK) course is designed for participants who want to learn how to design, build, and manage a data lake on AWS. Participants learn how to build an operational data lake that supports the analysis of both structured and unstructured data. The course covers the components and features of the services involved in creating a data lake: AWS Lake Formation to create the data lake, AWS Glue to build a data catalog, and Amazon Athena to analyze the data. The course contributes to preparation for the AWS Certified Data Analytics – Specialty certification.

Contact us now for full details and to request, with no obligation, a conversation with one of our instructors, or call our toll-free number (800-177596).

Course objectives

The main objectives of the Building Data Lakes on AWS (ANBDLK) course are summarized below:

- Learn to design, build, and manage an operational data lake on AWS that supports the analysis of both structured and unstructured data.

- Understand the components and features of the AWS services involved in creating a data lake.

- Use AWS Lake Formation to create a data lake and simplify its configuration, security, and management.

- Use AWS Glue to create a data catalog that makes data easier to discover and prepare for analysis.

- Use Amazon Athena to analyze data by running SQL queries directly on the data stored in the data lake.

Course certification

AWS Certified Data Analytics – Specialty exam

The AWS Certified Data Analytics – Specialty (DAS-C01) certification exam assesses candidates' advanced skills in designing, implementing, and managing data analytics solutions on AWS. The exam covers topics such as collecting, processing, and analyzing large volumes of data using AWS services such as Kinesis, S3, Redshift, and EMR.

The main goal is to ensure that candidates demonstrate solid knowledge of AWS best practices and advanced solutions for data analytics. During the exam, candidates face topics such as integrating AWS and third-party services for data analytics, optimizing performance, and implementing security solutions.

Course contents

Module 1: Introduction to data lakes

- Describe the value of data lakes

- Compare data lakes and data warehouses

- Describe the components of a data lake

- Recognize common architectures built on data lakes

Module 2: Data ingestion, cataloging, and preparation

- Describe the relationship between data lake storage and data ingestion

- Describe AWS Glue crawlers and how they are used to create a data catalog

- Identify data formatting, partitioning, and compression for efficient storage and query

- Lab 1: Set up a simple data lake
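
As an illustration of the partitioning topic in Module 2, the sketch below builds Hive-style partitioned object keys (`year=/month=/day=`), a layout that lets query engines skip partitions a query does not need. It is not course material: the function name `partitioned_key`, the `sales/orders` prefix, and the file name are hypothetical, and the Parquet-with-Snappy naming is only one common choice of format and compression.

```python
from datetime import date


def partitioned_key(prefix: str, event_date: date, filename: str) -> str:
    """Build a Hive-style partitioned object key (year=/month=/day=).

    The prefix and filename are hypothetical examples, not names
    taken from the course labs.
    """
    return (
        f"{prefix.strip('/')}/"
        f"year={event_date.year:04d}/"
        f"month={event_date.month:02d}/"
        f"day={event_date.day:02d}/"
        f"{filename}"
    )


# A columnar, compressed file placed under its date partition.
key = partitioned_key("sales/orders", date(2024, 1, 5), "part-0000.snappy.parquet")
print(key)
# sales/orders/year=2024/month=01/day=05/part-0000.snappy.parquet
```

With keys laid out this way, a crawler can typically register `year`, `month`, and `day` as partition columns, so a query filtered on them reads only the matching prefixes.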

Module 3: Data processing and analytics

- Recognize how data processing applies to a data lake

- Use AWS Glue to process data within a data lake

- Describe how to use Amazon Athena to analyze data in a data lake
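
To make the Athena topic in Module 3 concrete, here is a minimal sketch of submitting a SQL query with the boto3 Athena client. It is an assumption-laden illustration, not the course's lab code: the database `example_lake_db`, the table `orders`, the string-typed partition columns, and the results bucket are all hypothetical. The request is built as a plain dictionary so it can be inspected without AWS access; `run_query` needs boto3 and valid credentials.

```python
def build_athena_request(sql: str, database: str, output_location: str) -> dict:
    """Assemble keyword arguments for Athena's start_query_execution call."""
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def run_query(request: dict) -> str:
    """Submit the query and return its execution ID.

    Requires boto3 and AWS credentials; imported lazily so the
    request can be built and inspected without either.
    """
    import boto3

    athena = boto3.client("athena")
    response = athena.start_query_execution(**request)
    return response["QueryExecutionId"]


# Hypothetical database, table, and bucket names.
request = build_athena_request(
    sql="SELECT COUNT(*) AS order_count FROM orders WHERE year = '2024' AND month = '01'",
    database="example_lake_db",
    output_location="s3://example-athena-results/queries/",
)
print(request["QueryExecutionContext"])
```

The returned execution ID can then be passed to the client's `get_query_execution` and `get_query_results` calls to poll for completion and fetch rows.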

Module 4: Building a data lake with AWS Lake Formation

- Describe the features and benefits of AWS Lake Formation

- Use AWS Lake Formation to create a data lake

- Understand the AWS Lake Formation security model

- Lab 2: Build a data lake using AWS Lake Formation
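
The Lake Formation security model in Module 4 centers on granting permissions on catalog resources to principals. The sketch below shows one possible shape of a column-level `SELECT` grant using the boto3 Lake Formation client's `grant_permissions` call. Everything specific here is hypothetical: the role ARN, the database and table names, and the column list are placeholders, and the course labs may configure permissions differently (for example, through the console).

```python
def build_column_grant(principal_arn: str, database: str, table: str, columns: list) -> dict:
    """Assemble keyword arguments for Lake Formation's grant_permissions call,
    limiting SELECT to the listed columns of one table."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        },
        "Permissions": ["SELECT"],
    }


def apply_grant(grant: dict) -> None:
    """Send the grant to Lake Formation.

    Requires boto3, AWS credentials, and data lake administrator rights.
    """
    import boto3

    boto3.client("lakeformation").grant_permissions(**grant)


# Hypothetical principal and catalog names.
grant = build_column_grant(
    principal_arn="arn:aws:iam::123456789012:role/ExampleAnalystRole",
    database="example_lake_db",
    table="orders",
    columns=["order_id", "order_date", "total"],
)
print(grant["Resource"]["TableWithColumns"]["ColumnNames"])
```

Restricting the grant to named columns means the analyst role can query those fields while other columns of the same table stay hidden from it.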

Module 5: Additional Lake Formation configurations

- Automate AWS Lake Formation using blueprints and workflows

- Apply security and access controls to AWS Lake Formation

- Match records with AWS Lake Formation FindMatches

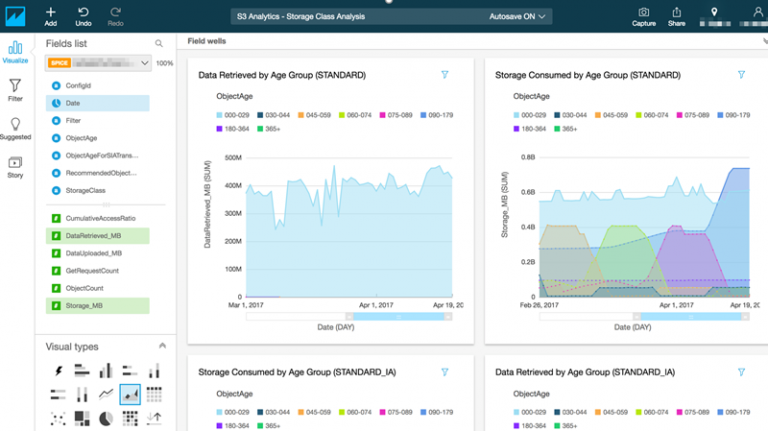

- Visualize data with Amazon QuickSight

- Lab 3: Automate data lake creation using AWS Lake Formation blueprints

- Lab 4: Data visualization using Amazon QuickSight

Module 6: Architecture and course review

- Post-course knowledge check

- Architecture review

Format

Instructor-led training course

Instructors

The instructors are accredited Amazon AWS instructors, also certified in other IT technologies, with years of hands-on experience in the field and in training.

Lab infrastructure

For every delivery format, participants can access the equipment and systems in our labs, or directly in the data centers of the vendor or its authorized providers, remotely and around the clock. Each participant has individual access to implement the various configurations, getting immediate hands-on feedback on the theory covered.

Course details

Prerequisites

Attending the AWS Technical Essentials course and the Data Analytics Fundamentals course is recommended.

Course duration

Intensive format: 1 day.

Attendance

Various Extensive and Intensive attendance options are available.

Course dates

- Building Data Lakes on AWS (Intensive format) – On request – 09:00/17:00

How to enroll

Enrollment is capped to guarantee every participant an excellent service. To enroll, request to be contacted through the link provided, call the toll-free number 800-177596, or send a request by email to [email protected].